Hadoop Administrator Online Training

The Hadoop Administration course from Inflametech provides all the skills needed to work successfully as a Hadoop Administrator, along with expertise in every step necessary to manage a Hadoop cluster.

Understanding Hadoop Administration is a highly valuable skill for anyone working at a company that uses Hadoop clusters to store and process data. Almost every large company you might want to work at uses Hadoop in some way, including Google, Amazon, Facebook, eBay, LinkedIn, IBM, Spotify, Twitter, and Yahoo! It is not just technology companies that need Hadoop; even the New York Times uses Hadoop for processing images. Any company that uses Hadoop to store, analyze, and process data needs Hadoop Administrators.

Online Class

Projects

Hands-On

25 Hrs Instructor-led Training

Mock Interview Session

Project Work & Exercises

Flexible Schedule

24 x 7 Lifetime Support & Access

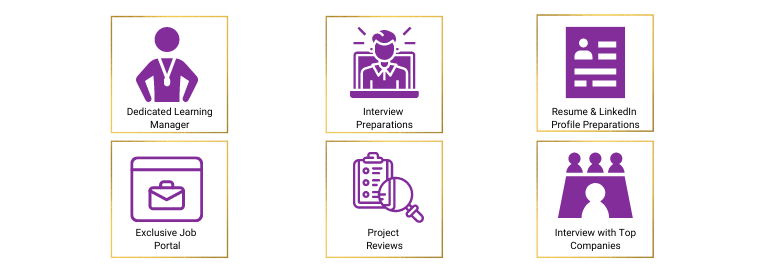

Certification and Job Assistance

Course Benefits

Hadoop Administration Certification Course Overview

- Setup a machine in VirtualBox

- How to add CentOS ISO image

- Start machines and make way to communicate

- Generating ssh key

- Format Namenode

- Drawbacks of storing data in tmp

- Start Admin commands

- Setting up pseudo-distributed mode

- Commissioning and Decommissioning of Nodes

- Working of Secondary NameNode

- Shutting down the namenode

- Using Namespace

- Backing up the data

- Restore the data

- Distcp Command

- Handle Crashing of NameNode

- Corrupt and Missing Blocks

- Getting metadata from secondary to primary location

- How to Manage a Hadoop Cluster

- How to Maintain a Hadoop Cluster

- How to Monitor a Hadoop Cluster

- How to Troubleshoot a Hadoop Cluster

- Software engineers and programmers who want to understand the administration of the larger Hadoop ecosystem.

- Hadoop developers and Java developers who want to become Hadoop Administrators and have an interest in the Big Data field.

- Project, program, or product managers who want to understand how to manage, monitor, and troubleshoot a Hadoop cluster.

- Linux/Unix administrators, data analysts, and database administrators who are curious about Hadoop administration and how it relates to their work.

- Database administrators and system architects who need to understand the components available in the Hadoop ecosystem and how to manage and monitor them.

There are no specific prerequisites for the Hadoop Administration Training, but basic knowledge of the Linux command-line interface will be beneficial.

Mentors Pool follows a rigorous certification process. To become a certified Hadoop Administrator, you must fulfill the following criteria:

- Online Instructor-led Course:

- Successful completion of all projects, which will be evaluated by trainers

- Scoring a minimum of 60 percent in the Hadoop Administrator quiz conducted by Mentors Pool

A Hadoop Administrator certification benefits an individual in the following ways:

- Become an in-demand resource who can manage Hadoop clusters and help the organization with big data analysis.

- The Hadoop market is expected to reach $99.31 billion by 2022, attaining a 28.5% CAGR. This is great news for those in the data analytics field.

- Exposure to multiple industries such as healthcare, consumer, banking, energy, and manufacturing.

- A Certified Hadoop Administrator can earn an average salary of $123,169 per year, according to ZipRecruiter.

Talk to Us

IN: +91-8197658094

According to Forbes, the Big Data and Hadoop market is expected to reach $99.31B by 2022, growing at a CAGR of 42.1% from 2015. McKinsey predicts that by 2018 there will be a shortage of 1.5M data experts. According to Indeed salary data, the average salary of Big Data Hadoop developers is $135k.

Skills Covered

Fees

Online Classroom

- 25 Hrs of Instructor-led Training

- 1:1 Doubt Resolution Sessions

- Attend as many batches as you like, for life

- Flexible Schedule

Batches

Dates

Days

Timings

Enrolment validity: Lifetime

EMI Option Available with different credit cards

Corporate Training

- Customised Learning

- Enterprise grade learning management system (LMS)

- 24x7 Support

- Enterprise grade reporting

Course Content

Hadoop Administration Certification Course Content

Learning Objective :

Understanding what Big Data is and the solutions it offers to traditional problems. You will learn about Hadoop and its core components, and how reads and writes happen in HDFS. You will also learn the roles and responsibilities of a Hadoop Administrator.

Topics :

- Introduction to big data

- Limitations of existing solutions

- Common Big Data domain scenarios

- Hadoop Architecture

- Hadoop Components and Ecosystem

- Data loading & Reading from HDFS

- Replication Rules

- Rack Awareness theory

- Hadoop cluster Administrator: Roles and Responsibilities.

Hands-on:

Writing data to and reading data from HDFS, and submitting jobs in Hadoop 1.0 and YARN.

Learning Objective :

Understanding the Installation of Hadoop Ecosystem.

Learning Objectives:

Understanding the different configuration files and building a multi-node Hadoop cluster. Differences between Hadoop 1.0 and Hadoop 2.0. You will also get to know the architecture of Hadoop 1.0 and Hadoop 2.0 (YARN).

Topics

- Working of HDFS and its internals

- Hadoop Server roles and their usage

- Hadoop Installation and Initial configuration

- Different Modes of Hadoop Cluster.

- Deploying Hadoop in a Pseudo-distributed mode

- Deploying a Multi-node Hadoop cluster

- Installing Hadoop Clients

- Understanding the working of HDFS and resolving simulated problems.

- Hadoop 1 and its Core Components.

- Hadoop 2 and its Core Components.

Hands-on:

Creating pseudo-distributed and fully distributed Hadoop clusters. Changing configuration properties while submitting jobs, and using different HDFS admin commands.

Learning Objectives:

Understanding the various properties of the NameNode, DataNode, and Secondary NameNode. You will also learn how to add DataNodes to the cluster and how to decommission them. In addition, you will learn about the various processing frameworks in Hadoop and their architecture from a Hadoop administrator's perspective, as well as schedulers.

Topics:

- Properties of NameNode, DataNode and Secondary Namenode

- OS Tuning for Hadoop Performance

- Understanding Secondary Namenode

- Log Files in Hadoop

- Working with Hadoop distributed cluster

- Decommissioning or commissioning of nodes

- Different Processing Frameworks

- Understanding MapReduce

- Spark and its Features

- Application Workflow in YARN

- YARN Metrics

- YARN Capacity Scheduler and Fair Scheduler

- Understanding Schedulers and enabling them.

Hands-on:

Changing the configuration files of the Secondary NameNode. Adding and removing DataNodes in a distributed cluster. Changing schedulers at run time while submitting jobs to YARN.

Learning Objectives:

You will learn regular cluster administration tasks such as balancing data in the cluster, protecting data by enabling trash, attempting a manual failover, and creating backups within or across clusters.

Topics:

- Namenode Federation in Hadoop

- HDFS Balancer

- High Availability in Hadoop

- Enabling Trash Functionality

- Checkpointing in Hadoop

- DistCP and Disk Balancer.

Hands-on:

Working with cluster administration and maintenance tasks. Running DistCP and HDFS Balancer commands to distribute data evenly.
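To make "even distribution" concrete, here is a minimal Python sketch of the balance criterion the HDFS Balancer works toward: a DataNode counts as balanced when its utilization is within a threshold (10 percentage points by default) of the overall cluster utilization. The node names and sizes are invented for illustration, and the function only models the criterion; it is not the Balancer's implementation.

```python
def unbalanced_nodes(nodes, threshold=10.0):
    """Return names of nodes whose utilization differs from the cluster
    utilization by more than `threshold` percentage points.

    `nodes` maps a node name to (used, capacity), both in the same unit.
    """
    total_used = sum(used for used, _ in nodes.values())
    total_capacity = sum(capacity for _, capacity in nodes.values())
    cluster_util = 100 * total_used / total_capacity
    return [
        name
        for name, (used, capacity) in nodes.items()
        if abs(100 * used / capacity - cluster_util) > threshold
    ]


# Hypothetical three-node cluster, sizes in TB.
cluster = {"dn1": (8, 10), "dn2": (5, 10), "dn3": (2, 10)}
print(unbalanced_nodes(cluster))
```

On a real cluster, the corresponding command is `hdfs balancer -threshold 10`, which moves blocks until every DataNode falls within the threshold.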

Learning Objectives:

You will learn how to back up and recover data on the master and slave nodes. You will also learn about allocating quotas to files on the master and slave nodes.

Topics:

- Key Admin commands like DFSADMIN

- Safemode

- Importing Check Point

- MetaSave command

- Data backup and recovery

- Backup vs Disaster recovery

- Namespace count quota or space quota

- Manual failover or metadata recovery.

Hands-on:

Take regular backups using MetaSave commands. You will also run commands to recover data using checkpoints.
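As a rough illustration of the admin commands listed in this module, the Python sketch below builds `hdfs dfsadmin` command lines for safe mode, MetaSave, and the two quota types. It only constructs and prints the commands; running them needs a configured Hadoop client and a live cluster. The file name `meta.txt`, the path `/user/alice`, and the quota values are hypothetical.

```python
import shlex


def dfsadmin(*args):
    """Build an `hdfs dfsadmin` command line as an argument list."""
    return ["hdfs", "dfsadmin", *map(str, args)]


commands = [
    # Report whether the NameNode is currently in safe mode.
    dfsadmin("-safemode", "get"),
    # Dump NameNode block and DataNode state to a file in its log directory.
    dfsadmin("-metasave", "meta.txt"),
    # Name quota: at most 10000 files and directories under the path.
    dfsadmin("-setQuota", 10000, "/user/alice"),
    # Space quota: at most 1 TB of raw (replicated) storage under the path.
    dfsadmin("-setSpaceQuota", "1t", "/user/alice"),
]

for cmd in commands:
    print(shlex.join(cmd))
```

Passing one of these lists to `subprocess.run(cmd, check=True)` would execute it on a machine where the `hdfs` client is installed and configured.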

Learning Objective:

You will learn about cluster planning and management, including the aspects you need to consider when planning the setup of a new cluster.

Topics :

- Planning a Hadoop 2.0 cluster

- Cluster sizing

- Hardware

- Network and Software considerations

- Popular Hadoop distributions

- Workload and usage patterns

- Industry recommendations.

Hands-on:

Setting up a new cluster and scaling it dynamically. Logging in to different Hadoop distributions online.
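Cluster sizing usually starts with back-of-the-envelope arithmetic. The sketch below shows one common way to estimate the DataNode count from the data volume. Apart from the default replication factor of 3, every number (25% headroom, 48 TB usable per node, 100 TB of data) is an assumption chosen for illustration, not a recommendation.

```python
import math


def nodes_needed(data_tb, replication=3, headroom=0.25, usable_tb_per_node=48):
    """Estimate the number of DataNodes required to hold `data_tb` of data.

    replication: HDFS replication factor (3 is the default).
    headroom: fraction of raw disk kept free for temporary and
        intermediate data (assumed value).
    usable_tb_per_node: disk capacity available to HDFS per node (assumed).
    """
    raw_tb = data_tb * replication / (1 - headroom)
    return math.ceil(raw_tb / usable_tb_per_node)


# 100 TB of data -> 400 TB of raw disk -> 9 nodes of 48 TB each.
print(nodes_needed(100))
```

A real plan would also account for data growth, compression, and CPU and memory requirements, which this estimate ignores.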

Learning Objectives:

You will learn about Hadoop cluster monitoring and security concepts. You will also learn how to secure a Hadoop cluster with Kerberos.

Topics :

- Monitoring Hadoop Clusters

- Authentication & Authorization

- Nagios and Ganglia

- Hadoop Security System Concepts

- Securing a Hadoop Cluster With Kerberos

- Common Misconfigurations

- Overview on Kerberos

- Checking log files to understand Hadoop clusters for troubleshooting.

Hands-on:

Monitoring the cluster and authorizing access to Hadoop resources by granting tickets using Kerberos.

Learning Objectives:

You will learn how to configure Hadoop 2 with high availability and how to upgrade to Hadoop 2. You will also learn how to work with the Hadoop ecosystem.

Topics :

- Configuring Hadoop 2 with high availability

- Upgrading to Hadoop 2

- Working with Sqoop

- Understanding Oozie

- Working with Hive.

- Working with Pig.

Hands-on:

Logging in to the Hive and Pig shells with their respective commands. You will also schedule an Oozie job.

Learning Objectives:

You will see how to work with CDH and its administration tool Cloudera Manager. You will also learn ecosystem administration and its optimization.

Topics:

- Cloudera Manager and cluster setup

- Hive administration

- HBase architecture

- HBase setup

- Hadoop/Hive/HBase performance optimization.

- Pig setup and working with the Grunt shell.

Hands-on:

Installing CDH and working with Cloudera Manager. Installing a new parcel on a CDH machine.

Course Projects

Automatic Scaling Up of DataNodes

Based on alerts in the cluster, a new DataNode should be added automatically, on the fly, when the cluster reaches a limit.

Upgrading Hadoop Ecosystem Tools

End-to-end installation and upgrade of Hadoop ecosystem tools.

Streaming Twitter Data using Flume

As part of the project, deploy Apache Flume to extract Twitter streaming data and load it into Hadoop for analysis. Also handle high-volume data spikes and scale horizontally to accommodate increased data volumes.

Course Certification

A Hadoop administrator administers and manages an organization's Hadoop clusters. A Hadoop administrator’s responsibilities include setting up Hadoop clusters, as well as backup, recovery, and maintenance of the clusters. Good knowledge of Hadoop architecture is required to become a Hadoop administrator. Some of the key responsibilities of a Hadoop Administrator are:

- Takes care of the day-to-day running of Hadoop clusters.

- Makes sure that the Hadoop cluster is running all the time.

- Responsible for managing and reviewing Hadoop log files.

- Responsible for capacity planning and estimating the requirements.

- Implementation of ongoing Hadoop infrastructure.

- Cluster maintenance along with the creation and removal of nodes.

- Keeping an eye on Hadoop cluster security and connectivity.

- Tuning the performance of Hadoop clusters.

Hadoop mainly consists of three layers:

- HDFS (Hadoop Distributed File System): the place where all the data is stored,

- Application layer (on which the MapReduce engine sits): to process the stored data, and

- YARN: allocates resources to the various slave nodes. All of these layers operate on master and slave nodes.

Hadoop is an open-source framework written in Java that enables the distributed processing of large datasets. Hadoop is not a programming language.

Hadoop can store and process large unstructured datasets distributed across clusters of computers using simple programming models. It breaks the data up and distributes the parts across nodes so they can be analyzed in parallel. Rather than relying on hardware to deliver high availability, the library is designed to detect and handle failures at the application layer, thereby delivering a highly available service on top of a cluster of computers. On top of this, Hadoop is an open-source framework available to everyone.

If Big Data is the problem, Hadoop can be said to be the solution. The Hadoop framework can be used to store and process big data across large clusters in an organized manner. Hadoop splits big data into small parts and stores them separately on different servers in a network. It is highly efficient and can handle large volumes of data. So, with knowledge of Hadoop, you can work on Big Data quickly and efficiently.
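The "small parts" are HDFS blocks. As a minimal sketch, assuming the Hadoop 2 defaults of a 128 MiB block size and a replication factor of 3, the Python below estimates how many blocks a file is split into and how much raw storage its replicas occupy. The function names are illustrative and not part of any Hadoop API.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # 128 MiB, the default dfs.blocksize in Hadoop 2
REPLICATION = 3                 # the default dfs.replication


def block_count(file_size_bytes, block_size=BLOCK_SIZE):
    """Number of HDFS blocks needed for a file (integer ceiling division)."""
    return (file_size_bytes + block_size - 1) // block_size


def raw_bytes(file_size_bytes, replication=REPLICATION):
    """Raw disk space used across the cluster, counting every replica.

    A partly filled last block only occupies its actual size on disk.
    """
    return file_size_bytes * replication


one_gib = 1024 ** 3
print(block_count(one_gib))  # a 1 GiB file is split into 8 blocks
print(raw_bytes(one_gib))    # and occupies 3 GiB of raw storage
```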

Hadoop is not a database; it is a software ecosystem that allows parallel computing on a vast scale. Hadoop enables specific types of NoSQL distributed databases (e.g. HBase) to spread the data across thousands of servers without affecting the quality.

Certification Course Reviews

Certification Course FAQs

Hadoop is the most favoured and in-demand Big Data tool worldwide. It is popular due to the following attributes:

- Power of Computing-

The distributed computing model in Hadoop processes big data very fast. The more nodes you use, the more processing power you have.

- Massive data storing and processing-

This tool lets organizations store and process massive amounts of any type of data quickly.

- High fault tolerance-

HDFS is highly fault tolerant and handles faults by replicating data across nodes. If a node goes down, its tasks are redirected to other nodes that hold replicas, so the distributed computation does not fail.

- Low cost-

It is an open-source framework that uses commodity hardware to store and process large amounts of data.

- More Flexibility-

Unlike a traditional relational database, Hadoop does not require data to be preprocessed before it is stored.

- Scalability-

If you need to handle more data, you can easily extend your system by adding nodes, with little administration required.
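The replication-based fault tolerance described in the list above can be illustrated with a small simulation. This is a sketch under simplifying assumptions: replicas are placed round-robin on distinct nodes, which is not the rack-aware placement policy HDFS actually uses, and the node names are hypothetical.

```python
def place_replicas(num_blocks, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds number of nodes")
    return {
        block: [nodes[(block + i) % len(nodes)] for i in range(replication)]
        for block in range(num_blocks)
    }


def readable_blocks(placement, failed):
    """Return the blocks that still have a replica on a live node."""
    return [
        block
        for block, holders in placement.items()
        if any(node not in failed for node in holders)
    ]


nodes = ["dn1", "dn2", "dn3", "dn4"]
placement = place_replicas(6, nodes)

# With three replicas per block, losing any two nodes leaves all data readable.
print(len(readable_blocks(placement, {"dn1", "dn2"})))
# Losing three nodes removes every replica of some blocks.
print(len(readable_blocks(placement, {"dn1", "dn2", "dn3"})))
```

In a real cluster the NameNode also re-replicates under-replicated blocks after a failure, which this sketch leaves out.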

The following are the top five organizations that are using Hadoop:

- Marks and Spencer

- Royal Bank of Scotland

- Expedia

- British Airways

- Royal Mail

Hadoop is a crucial tool in Data Science, and Data Scientists who have knowledge of Hadoop are highly sought after. Given below are the reasons why Data Scientists use Hadoop:

- Transparent Parallelism

With Hadoop, Data Scientists can write Java-based MapReduce code and use other big data tools in parallel.

- Data transport is easy

Hadoop helps Data Scientists in transporting the data to different nodes on a system at a faster rate.

- Load data into Hadoop first

The very first thing data scientists can do is to load data into Hadoop. For this, they need not do any transformations to get the data into the cluster.

- Easy data exploration

With Hadoop, Data Scientists can easily explore and figure out the complexities in the data.

- Data Filtering

Hadoop helps data scientists to filter a subset of data based on requirements and address a specific business problem.

- Sampling for data modelling

Sampling in Hadoop gives a hint to Data Scientists on what approach might work best for data modelling.

Most companies are looking for candidates who can handle their requirements. Hadoop Administration training is the best way to demonstrate to your employer that you belong to the category of niche professionals who can make a difference.

Today, organizations need Hadoop administrators to take care of large Hadoop clusters. Top companies such as Facebook, eBay, and Twitter use Hadoop, and professionals with Hadoop skills are in huge demand. According to Payscale, the average salary for Hadoop Administrators is $121k.

The following are some of the companies that hire Hadoop Administrators:

- IBM

- Amazon

- Infosys

- Vodafone

- Data Labs

- Capgemini

- UnitedHealth Group

- Cognizant Softvision