Apache Spark & Scala Online Training

Designed to meet industry benchmarks, Mentors Pool's Apache Spark and Scala certification course is curated by top industry experts. This live, instructor-led training helps you master Apache Spark and the Spark ecosystem, including Spark RDDs, Spark SQL, and Spark MLlib, through hands-on demonstrations of key concepts. The course is fully immersive: you learn from and interact with the instructor and your peers. Enroll now in this Scala online training.


Online Class

Projects

Hands-On


24 Hrs Instructor-led Training

Mock Interview Session

Project Work & Exercises

Flexible Schedule

24 x 7 Lifetime Support & Access

Certification and Job Assistance

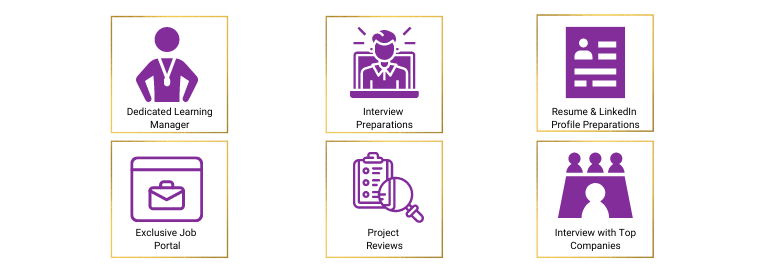

Course Benefits

Apache Spark & Scala Online Training Course Overview

- Frame big data analysis problems as Apache Spark scripts

- Develop distributed code using the Scala programming language

- Optimize Spark jobs through partitioning, caching, and other techniques

- Build, deploy, and run Spark scripts on Hadoop clusters

- Process continual streams of data with Spark Streaming

- Transform structured data using SparkSQL, DataSets, and DataFrames

- Traverse and analyze graph structures using GraphX

- Analyze massive data sets with machine learning on Spark

Who Should Attend

- Software engineers who want to expand their skills into big data processing on a cluster

Prerequisites

- Prior programming or scripting experience. If you have none, take an introductory programming course first.

- A basic understanding of SQL, databases, and database query languages.

- Working knowledge of Linux or Unix-based systems is helpful but not mandatory.

- Certification training in Big Data Hadoop Development is also recommended.

Mentors Pool follows a rigorous certification process. To become a certified Apache Spark & Scala professional, you must meet the following criteria:

- Online Instructor-led Course:

- Successful completion of all projects, which will be evaluated by trainers

- Scoring a minimum of 60 percent in the Apache Spark & Scala quiz conducted by Mentors Pool

According to O'Reilly's Data Science Salary Survey, there is a strong link between using Spark and Scala and professionals' salaries. The survey found that Apache Spark skills added $11,000 to the median salary, while the Scala programming language added $4,000.

Among programmers using ten of the most prominent Hadoop development tools, Apache Spark developers have been reported to earn the highest average salary. Real-time big data applications are going mainstream quickly, and enterprises are generating data at an unprecedented rate. This makes it a good time for professionals to learn Apache Spark online and help companies with complex data analysis.

Talk to Us

IN: +91-8197658094

- The average pay stands at $108,366 (Indeed.com).

- Spark is popular in leading companies, including Microsoft, Amazon, and IBM.

- LinkedIn, Twitter, and Netflix are among the companies using Scala.

- Global Spark market revenue will grow to $4.2 billion by 2022, with a CAGR of 67% (Marketanalysis.com).

Skills Covered

Fees

Online Classroom

- 24 Hrs of Instructor-led Training

- 1:1 Doubt Resolution Sessions

- Attend as many batches as you like, for life

- Flexible Schedule


Enrolment validity: Lifetime


EMI Option Available with different credit cards


Corporate Training

- Customised Learning

- Enterprise grade learning management system (LMS)

- 24x7 Support

- Enterprise grade reporting

Course Content


Apache Spark & Scala Online Training Course Content

Learning Objectives: Understand Big Data and its components such as HDFS. You will learn about the Hadoop Cluster Architecture. You will also get an introduction to Spark and the difference between batch processing and real-time processing.

Topics:

- What is Big Data?

- Big Data Customer Scenarios

- What is Hadoop?

- Hadoop’s Key Characteristics

- Hadoop Ecosystem and HDFS

- Hadoop Core Components

- Rack Awareness and Block Replication

- YARN and its Advantages

- Hadoop Cluster and its Architecture

- Hadoop: Different Cluster Modes

- Big Data Analytics with Batch & Real-time Processing

- Why is Spark needed?

- What is Spark?

- How does Spark differ from other frameworks?

Hands-on: Scala REPL Detailed Demo.

Learning Objectives: Learn the basics of Scala that are required for programming Spark applications. Also learn about the basic constructs of Scala such as variable types, control structures, collections such as Array, ArrayBuffer, Map, Lists, and many more.

Topics:

- What is Scala?

- Why Scala for Spark?

- Scala in other Frameworks

- Introduction to Scala REPL

- Basic Scala Operations

- Variable Types in Scala

- Control Structures in Scala

- Foreach loop, Functions and Procedures

- Collections in Scala: Array

- ArrayBuffer, Map, Tuples, Lists, and more

Hands-on: Scala REPL Detailed Demo
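As an illustration of the basic constructs listed above, here is a small plain-Scala sketch. The object name `ScalaBasics` and all the values in it are hypothetical, chosen only to show `val` versus `var`, `if` as an expression, a guarded `for` loop, `Array`, `ArrayBuffer`, `Map`, tuples, `List`, and `foreach`.

```scala
import scala.collection.mutable.ArrayBuffer

// Hypothetical example object; names and values are illustrative only
object ScalaBasics {
  // `val` is an immutable binding; `var` can be reassigned
  val greeting: String = "Hello, Scala"
  var counter: Int = 0

  // `if` is an expression: it returns a value
  def sizeLabel(n: Int): String = if (n >= 10) "large" else "small"

  // Array: fixed length, mutable elements
  val scores: Array[Int] = Array(70, 85, 92)

  // ArrayBuffer: a growable, mutable sequence, filled with a guarded for-loop
  def highScores(): ArrayBuffer[Int] = {
    val buf = ArrayBuffer[Int]()
    for (s <- scores if s >= 80) buf += s
    buf
  }

  // Immutable Map, a tuple, and a List
  val stock: Map[String, Int] = Map("apples" -> 4, "pears" -> 6, "plums" -> 4)
  val extra: (String, Int) = ("figs", 5)
  def totalItems: Int = stock.values.toList.sum + extra._2

  def main(args: Array[String]): Unit = {
    counter += 1
    stock.foreach { case (fruit, n) => println(s"$fruit: $n") } // foreach over a Map
    println(sizeLabel(totalItems))
    println(highScores())
  }
}
```

Pasting the body of the object into the Scala REPL line by line is a convenient way to try each construct interactively.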

Learning Objectives: Learn about object-oriented programming and functional programming techniques in Scala.

Topics

- Variables in Scala

- Methods, classes, and objects in Scala

- Packages and package objects

- Traits and trait linearization

- Java Interoperability

- Introduction to functional programming

- Functional Scala for the data scientists

- Why are functional programming and Scala important for learning Spark?

- Pure functions and higher-order functions

- Using higher-order functions

- Error handling in functional Scala

- Functional programming and data mutability

Hands-on: OOP Concepts and Functional Programming
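The functional ideas in this module (pure functions, higher-order functions, and error handling without exceptions escaping) can be sketched in a few lines of plain Scala. The object and function names below are hypothetical; `Try`, `Success`, and `Failure` come from the standard library's `scala.util` package.

```scala
import scala.util.{Failure, Success, Try}

// Hypothetical example object; names are illustrative only
object FunctionalBasics {
  // Pure function: the result depends only on the input, with no side effects
  def square(x: Int): Int = x * x

  // Higher-order function: takes another function as a parameter
  def applyTwice(f: Int => Int, x: Int): Int = f(f(x))

  // Higher-order collection methods (filter, map) take functions as arguments
  def sumOfEvenSquares(xs: List[Int]): Int =
    xs.filter(_ % 2 == 0).map(square).sum

  // Functional error handling: Try captures a thrown exception as a value
  def parseInt(s: String): Try[Int] = Try(s.trim.toInt)

  // Invalid inputs are dropped by converting each Try to an Option
  def parseAll(raw: List[String]): List[Int] =
    raw.flatMap(s => parseInt(s).toOption)

  def main(args: Array[String]): Unit = {
    println(applyTwice(square, 3)) // square(square(3))
    println(sumOfEvenSquares(List(1, 2, 3, 4)))
    parseInt("oops") match {
      case Success(n) => println(s"parsed $n")
      case Failure(e) => println(s"failed: ${e.getMessage}")
    }
    println(parseAll(List("1", " 2", "x", "3")))
  }
}
```

The same style carries over to Spark, where transformations such as `map` and `filter` take functions as arguments and work best when those functions are pure.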

Learning Objectives: Learn about the Scala collection APIs, types and hierarchies. Also, learn about performance characteristics.

Topics

- Scala collection APIs

- Types and hierarchies

- Performance characteristics

- Java interoperability

- Using Scala implicits
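To make "Using Scala implicits" concrete, here is a minimal sketch of the two most common forms: an implicit class that adds an extension method to an existing type, and an implicit parameter filled in by the compiler. The names `ImplicitsDemo`, `WordOps`, `Separator`, and `joinAll` are hypothetical.

```scala
// Hypothetical example object; names are illustrative only
object ImplicitsDemo {
  // Implicit class: adds a `wordCount` extension method to String
  implicit class WordOps(val s: String) extends AnyVal {
    def wordCount: Int = s.split("\\s+").count(_.nonEmpty)
  }

  // Implicit parameter: the compiler supplies an implicit Separator in scope
  case class Separator(value: String)
  implicit val defaultSep: Separator = Separator(", ")

  def joinAll(items: List[String])(implicit sep: Separator): String =
    items.mkString(sep.value)

  def main(args: Array[String]): Unit = {
    println("spark and scala".wordCount)
    println(joinAll(List("RDD", "DataFrame", "Dataset")))
    println(joinAll(List("a", "b"))(Separator(" | "))) // an explicit argument overrides the implicit
  }
}
```

Callers outside the object bring these into scope with `import ImplicitsDemo._`.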

Learning Objectives: Understand Apache Spark and learn how to develop Spark applications.

Topics:

- Introduction to data analytics

- Introduction to big data

- Distributed computing using Apache Hadoop

- Introducing Apache Spark

- Apache Spark installation

- Spark Applications

- The backbone of Spark: RDD

- Loading Data

- What is a Lambda?

- Using the Spark shell

- Actions and Transformations

- Associative Property

- Implant on Data

- Persistence

- Caching

- Loading and Saving data

Hands-on:

- Building and Running Spark Applications

- Spark Application Web UI

- Configuring Spark Properties
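The "Associative Property" topic matters because Spark's `reduce` combines values within each partition first and then combines the partial results; Spark's documentation asks for a commutative and associative function for this reason. The plain-Scala sketch below only simulates that two-level reduction on a local collection (the object and function names are hypothetical; this is not Spark code) to show that addition gives the same answer as a sequential reduce while subtraction does not.

```scala
// Hypothetical simulation of partition-then-combine reduction on a local collection
object PartitionedReduce {
  // Split data into roughly equal chunks, mimicking partitions
  def partition[A](data: Seq[A], numPartitions: Int): Seq[Seq[A]] = {
    val size = math.max(1, math.ceil(data.size.toDouble / numPartitions).toInt)
    data.grouped(size).toSeq
  }

  // Reduce each chunk locally, then reduce the partial results
  def parallelStyleReduce[A](data: Seq[A], numPartitions: Int)(f: (A, A) => A): A =
    partition(data, numPartitions).map(_.reduce(f)).reduce(f)

  def main(args: Array[String]): Unit = {
    val xs = 1 to 8
    println(xs.reduce(_ + _))                  // 36
    println(parallelStyleReduce(xs, 3)(_ + _)) // 36: addition is associative
    println(xs.reduce(_ - _))                  // -34
    println(parallelStyleReduce(xs, 3)(_ - _)) // 4: subtraction is not associative
  }
}
```

With three chunks `[1,2,3]`, `[4,5,6]`, `[7,8]`, the partial differences are -4, -7, and -1, which combine to 4 rather than -34.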

Learning Objectives: Gain insight into Spark RDDs and RDD manipulations for implementing business logic (transformations, actions, and functions performed on RDDs).

Topics

- Challenges in Existing Computing Methods

- Probable Solution & How RDD Solves the Problem

- What is RDD, Its Operations, Transformations & Actions

- Data Loading and Saving Through RDDs

- Key-Value Pair RDDs

- Other Pair RDDs, Two Pair RDDs

- RDD Lineage

- RDD Persistence

- WordCount Program Using RDD Concepts

- RDD Partitioning & How It Helps Achieve Parallelization

- Passing Functions to Spark

Hands-on:

- Loading data in RDD

- Saving data through RDDs

- RDD Transformations

- RDD Actions and Functions

- RDD Partitions

- WordCount through RDDs
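The WordCount program follows a fixed shape: split lines into words, pair each word with 1, then sum the counts per word. In Spark's RDD API this is typically written with `sc.textFile(...)`, `flatMap`, `map`, and `reduceByKey(_ + _)`. The sketch below reproduces the same pipeline on a local Scala collection so it runs without a cluster; `groupBy` stands in for the shuffle and the per-key sum stands in for `reduceByKey`. The object name is hypothetical.

```scala
// Hypothetical local sketch of the RDD word-count pipeline using plain Scala collections
object WordCountSketch {
  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.toLowerCase.split("\\W+"))  // like RDD.flatMap: lines -> words
      .filter(_.nonEmpty)                    // drop empty tokens from leading punctuation
      .map(word => (word, 1))                // like RDD.map: word -> (word, 1)
      .groupBy(_._1)                         // stands in for the shuffle by key
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // stands in for reduceByKey(_ + _)

  def main(args: Array[String]): Unit = {
    val lines = Seq("Spark and Scala", "Scala runs on the JVM", "Spark scales")
    wordCount(lines).toSeq.sortBy(-_._2).foreach(println)
  }
}
```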

Learning Objectives: Learn about Spark SQL, which is used to process structured data with SQL queries, DataFrames, and Datasets, along with the different kinds of SQL operations performed on DataFrames. Also, learn about Spark and Hive integration.

Topics

- Need for Spark SQL

- What is Spark SQL?

- Spark SQL Architecture

- SQL Context in Spark SQL

- User Defined Functions

- Data Frames & Datasets

- Interoperating with RDDs

- JSON and Parquet File Formats

- Loading Data through Different Sources

- Spark – Hive Integration

Hands-on:

- Spark SQL – Creating Data Frames

- Loading and Transforming Data through Different Sources

- Spark-Hive Integration

Learning Objectives: Learn why machine learning is needed, different machine learning techniques and algorithms, and Spark MLlib.

Topics

- Why Machine Learning?

- What is Machine Learning?

- Where is Machine Learning Used?

- Different Types of Machine Learning Techniques

- Introduction to MLlib

- Features of MLlib and MLlib Tools

- Various ML algorithms supported by MLlib

- Optimization Techniques

Learning Objectives: Implement various algorithms supported by MLlib, such as Linear Regression, Decision Tree, and Random Forest.

Topics

- Supervised Learning – Linear Regression, Logistic Regression, Decision Tree, Random Forest

- Unsupervised Learning – K-Means Clustering

Hands-on:

- Machine Learning MLlib

- K-Means Clustering

- Linear Regression

- Logistic Regression

- Decision Tree

- Random Forest
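MLlib provides a distributed K-Means implementation; the underlying idea is Lloyd's algorithm, which alternates an assignment step (each point goes to its nearest centroid) and an update step (each centroid moves to the mean of its points). The plain-Scala sketch below shows only that algorithm on a small local data set with caller-supplied starting centroids. It is not the MLlib API, and all names are hypothetical.

```scala
// Hypothetical local sketch of Lloyd's K-Means algorithm (not the MLlib API)
object KMeansSketch {
  type Point = (Double, Double)

  def dist2(a: Point, b: Point): Double = {
    val dx = a._1 - b._1
    val dy = a._2 - b._2
    dx * dx + dy * dy
  }

  // Assignment step: index of the nearest centroid
  def closest(p: Point, centroids: Vector[Point]): Int =
    centroids.indices.minBy(i => dist2(p, centroids(i)))

  // Update step: mean of a non-empty group of points
  def mean(ps: Seq[Point]): Point =
    (ps.map(_._1).sum / ps.size, ps.map(_._2).sum / ps.size)

  // Repeat assignment + update for a fixed number of iterations
  def kMeans(points: Seq[Point], initial: Vector[Point], iterations: Int): Vector[Point] =
    (1 to iterations).foldLeft(initial) { (centroids, _) =>
      val groups = points.groupBy(p => closest(p, centroids))
      // Keep the old centroid if no point was assigned to it
      centroids.indices.map(i => groups.get(i).map(mean).getOrElse(centroids(i))).toVector
    }

  def main(args: Array[String]): Unit = {
    val pts = Seq((0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (10.0, 10.0), (11.0, 10.0), (10.0, 11.0))
    println(kMeans(pts, Vector((0.0, 0.0), (1.0, 0.0)), iterations = 10))
  }
}
```

Even with two poorly placed starting centroids, the example converges within a few iterations to one centroid per visible cluster, at roughly (1/3, 1/3) and (31/3, 31/3).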

Learning Objectives: Understand Kafka and its architecture. Also, learn about the Kafka cluster and how to configure different types of Kafka clusters. Get introduced to Apache Flume, its architecture, and how it is integrated with Apache Kafka for event processing. Finally, learn how to ingest streaming data using Flume.

Topics

- Need for Kafka

- What is Kafka?

- Core Concepts of Kafka

- Kafka Architecture

- Where is Kafka Used?

- Understanding the Components of Kafka Cluster

- Configuring Kafka Cluster

- Kafka Producer and Consumer Java API

- Need for Apache Flume

- What is Apache Flume?

- Basic Flume Architecture

- Flume Sources

- Flume Sinks

- Flume Channels

- Flume Configuration

- Integrating Apache Flume and Apache Kafka

Hands-on:

- Configuring Single Node Single Broker Cluster

- Configuring Single Node Multi Broker Cluster

- Producing and consuming messages

- Flume Commands

- Setting up Flume Agent

Learning Objectives: Learn about the different streaming data sources such as Kafka and Flume. Also, learn to create a Spark streaming application.

Topics

- Apache Spark Streaming: Data Sources

- Streaming Data Source Overview

- Apache Flume and Apache Kafka Data Sources

Hands-on:

Perform Twitter Sentiment Analysis Using Spark Streaming

Learning Objectives: Learn the key concepts of Spark GraphX programming and operations along with different GraphX algorithms and their implementations.

Topics

- A brief introduction to graph theory

- GraphX

- VertexRDD and EdgeRDD

- Graph operators

- Pregel API

- PageRank
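PageRank repeatedly lets each vertex share its current rank equally along its out-edges, then recomputes every rank as a reset term plus the damped sum of what it received. The plain-Scala sketch below uses the non-normalized update `rank(v) = resetProb + (1 - resetProb) * sum(rank(u) / outDegree(u))` over in-neighbors `u`, which is similar in form to the one GraphX documents for its PageRank; it is a local illustration, not GraphX's implementation. The object name and the default `resetProb = 0.15` are assumptions for the example.

```scala
// Hypothetical local sketch of iterative PageRank (not the GraphX API)
object PageRankSketch {
  // edges: directed (src, dst) pairs over vertices 0 until n
  def pageRank(n: Int, edges: Seq[(Int, Int)], iterations: Int,
               resetProb: Double = 0.15): Vector[Double] = {
    val outDegree: Map[Int, Int] =
      edges.groupBy(_._1).map { case (v, es) => (v, es.size) }

    (1 to iterations).foldLeft(Vector.fill(n)(1.0)) { (ranks, _) =>
      // Each vertex sends rank / outDegree along each of its out-edges
      val incoming: Map[Int, Double] = edges
        .map { case (src, dst) => (dst, ranks(src) / outDegree(src)) }
        .groupBy(_._1)
        .map { case (v, contribs) => (v, contribs.map(_._2).sum) }
      // Reset term plus damped sum of contributions; vertices with no in-edges get only the reset term
      Vector.tabulate(n)(v => resetProb + (1 - resetProb) * incoming.getOrElse(v, 0.0))
    }
  }

  def main(args: Array[String]): Unit = {
    println(pageRank(3, Seq((0, 1), (1, 2), (2, 0)), iterations = 20)) // a 3-cycle
    println(pageRank(3, Seq((1, 0), (2, 0)), iterations = 5))          // two vertices pointing at vertex 0
  }
}
```

On a 3-cycle every vertex keeps a rank of 1.0; when vertices 1 and 2 both link to vertex 0, vertex 0 settles at 0.15 + 0.85 × 0.3 = 0.405.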

Course Projects

Adobe Analytics

Adobe Analytics processes billions of transactions a day across major web and mobile properties. In recent years, Adobe has modernized its batch processing stack by adopting technologies such as Hadoop, MapReduce, and Spark. In this project, we will see how Spark and Scala help in the refactoring process. Spark lets you define arbitrarily complex processing pipelines without external coordination. It also supports stateful streaming aggregations, which reduced latency by using micro-batches of seconds instead of minutes. With Scala and Spark, a wide range of operations can be performed, including batch processing, streaming, stateful aggregations and analytics, and ETL jobs.
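A stateful streaming aggregation over micro-batches keeps a running state and merges each small batch into it as the batch arrives. Spark exposes this idea through `updateStateByKey` in the DStream API and through stateful aggregations in Structured Streaming; the plain-Scala sketch below only models the concept on local collections, with a running count per key. The object name and the sample event keys are hypothetical.

```scala
// Hypothetical local model of stateful aggregation over micro-batches (not the Spark Streaming API)
object MicroBatchSketch {
  // One micro-batch: the events that arrived during one short interval
  type Batch = Seq[String]

  // Merge one batch's events into the running per-key counts
  def updateState(state: Map[String, Int], batch: Batch): Map[String, Int] =
    batch.foldLeft(state) { (acc, key) => acc.updated(key, acc.getOrElse(key, 0) + 1) }

  // Process batches in arrival order, returning the state after each batch
  def run(batches: Seq[Batch]): Seq[Map[String, Int]] =
    batches.scanLeft(Map.empty[String, Int])(updateState).tail

  def main(args: Array[String]): Unit = {
    val batches = Seq(Seq("click", "view"), Seq("click"), Seq("view", "view"))
    run(batches).zipWithIndex.foreach { case (state, i) => println(s"after batch $i: $state") }
  }
}
```

Shorter intervals mean each batch is smaller and results are updated sooner, which is the latency benefit the Adobe description refers to.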

Interactive Analytics

Apache Spark has many features, such as MLlib and GraphX, and is applied in areas like fog computing and IoT. Among its most notable features is its support for interactive analysis. MapReduce is built for batch processing, and SQL-on-Hadoop engines are usually slow; Apache Spark processes data faster, so it can run exploratory queries against live data without sampling. Spark provides an easy-to-learn API and is a strong tool for interactive data analysis; it is available in Scala as well as Java, Python, and R. Structured Streaming, a newer feature, also helps with web analytics by letting users run interactive queries against live web-visitor data.

Personalizing News Pages For Web Visitors In Yahoo

Various Spark projects are running at Yahoo for different applications. For personalizing news pages, Yahoo uses ML algorithms running on Spark to figure out what individual users are interested in, and to categorize news stories as they arise to determine which users would be interested in reading them. To do this, Yahoo wrote a Spark ML algorithm in 120 lines of Scala. (Previously, its ML algorithm for news personalization was written in 15,000 lines of C++.) With just 30 minutes of training on a large data set of a hundred million records, the Scala ML algorithm was ready for business.

Course Certification

Apache Spark is an open-source parallel processing framework for running large-scale data analytics applications across clustered computers. It can handle both batch and real-time analytics and data processing workloads.

Apache Spark can process data from a variety of data repositories, including the Hadoop Distributed File System (HDFS), NoSQL databases and relational data stores, such as Apache Hive. Spark supports in-memory processing to boost the performance of big data analytics applications, but it can also perform conventional disk-based processing when data sets are too large to fit into the available system memory.

The Spark Core engine uses the resilient distributed dataset, or RDD, as its basic data type. The RDD is designed to hide much of the computational complexity from users. It aggregates data and partitions it across a server cluster, where it can then be computed and either moved to a different data store or run through an analytic model. The user doesn't have to define where specific files are sent or which computational resources are used to store or retrieve them.
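One way Spark decides which partition a key-value record belongs to is hash partitioning: take the key's hash code modulo the number of partitions, adjusted to be non-negative. Spark's `HashPartitioner` follows this rule; the plain-Scala sketch below reproduces the rule on a local collection to show why all records with the same key end up together. The object and function names are hypothetical.

```scala
// Hypothetical local sketch of hash partitioning (mirrors the rule, not Spark's classes)
object HashPartitionSketch {
  // Non-negative modulo so negative hash codes still map to a valid partition; null keys go to 0
  def partitionFor(key: Any, numPartitions: Int): Int =
    if (key == null) 0 else Math.floorMod(key.hashCode, numPartitions)

  // Group records into buckets by key, as a hash partitioner would assign them
  def partitionAll[K, V](records: Seq[(K, V)], numPartitions: Int): Map[Int, Seq[(K, V)]] =
    records.groupBy { case (k, _) => partitionFor(k, numPartitions) }

  def main(args: Array[String]): Unit = {
    val records = Seq(("a", 1), ("b", 2), ("a", 3), ("c", 4))
    partitionAll(records, 4).toSeq.sortBy(_._1).foreach { case (p, rs) => println(s"partition $p: $rs") }
  }
}
```

Because every record with a given key lands in the same partition, per-key operations such as summing counts can run on each partition independently.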

In addition, Spark can handle more than the batch processing applications that MapReduce is limited to running.

Spark Libraries

The Spark Core engine functions partly as an application programming interface (API) layer and underpins a set of related tools for managing and analyzing data. Aside from the Spark Core processing engine, the Apache Spark API environment comes packaged with some libraries of code for use in data analytics applications. These libraries include:

Spark SQL

One of the most commonly used libraries, Spark SQL enables users to query data stored in disparate applications using the common SQL language.

Spark Streaming

This library enables users to build applications that analyze and present data in real time.

MLlib

A library of machine learning code that enables users to apply advanced statistical operations to data in their Spark cluster and to build applications around these analyses.

GraphX

A built-in library of algorithms for graph-parallel computation.

Apache Spark is a general-purpose cluster-computing framework that can be used for multiple workloads, such as streaming data, graph processing, and machine learning.

The different components of Apache Spark are Spark Core and the libraries built on top of it: Spark SQL, Spark Streaming, MLlib, and GraphX.

The main difference between Spark and Scala is that Apache Spark is a cluster-computing framework designed for fast processing of large data sets, including data stored in Hadoop, while Scala is a general-purpose programming language that supports both functional and object-oriented programming.

The advantages/benefits of Apache Spark are:-

Integration with Hadoop

Spark integrates closely with Hadoop: it can read from and write to the Hadoop Distributed File System (HDFS) and run on Hadoop YARN clusters. This makes it advantageous for those who are already familiar with Hadoop.

Faster

Spark supports the same map-and-reduce style of processing as MapReduce, but it represents data as RDDs (Resilient Distributed Datasets) that can be kept in memory between processing steps. Because intermediate data does not have to be written to and re-read from disk each time, comparable jobs, especially iterative ones, can run much faster.

Real-time stream processing

Every year, the volume of real-time data collected from various sources keeps growing rapidly. This is where processing and manipulating real-time data helps. Spark lets us analyze real-time data as it is collected.

Typical applications include fraud detection, electronic trading data, and log processing in live streams (website logs).

Graph Processing

Apart from stream processing, Spark can also be used for graph processing. From advertising to social data analysis, graph processing captures relationships between entities in the data, such as people and objects, which are then mapped out. This has led to recent advances in machine learning and data mining.

Powerful

Today, many companies manage two different systems to handle their data and end up building separate applications: one for streaming and storing real-time data, and another for manipulating and analyzing it. This costs a lot of storage space and computation time. Spark provides the flexibility to implement both batch and stream processing within one framework, which lets organizations simplify deployment, maintenance, and application development.

Certification Course Reviews

Certification Course FAQs

These are the reasons why you should learn Apache Spark:-

- Spark can be integrated well with Hadoop and that’s a great advantage for those who are familiar with the latter.

- According to technology forecasts, Spark is the future of big data processing worldwide. Standards for big data analytics are rising sharply with Spark, driven by high-speed data processing and real-time results.

- Spark is an in-memory data processing framework that is positioned to take over much of the primary processing for Hadoop workloads. Being much faster and easier to program than MapReduce, Spark is now a top-level Apache project.

- The number of companies using or planning to use Spark has exploded over the last year. This surge in popularity is driven by Spark's mature open-source components and an expanding community of users.

- There is a huge and growing demand for Spark professionals.

To learn Spark, you just need:

- 4 GB RAM

- Windows 7 or higher OS

- i3 or higher processor

Mentors Pool training is intended to help you become an effective Apache Spark developer. After completing this course, you will acquire skills such as:

- Write Scala programs to build Spark applications

- Master the concepts of HDFS

- Understand Hadoop 2.x Architecture

- Understand Spark and its Ecosystem

- Implement Spark operations on Spark Shell

- Implement Spark applications on YARN (Hadoop)

- Write Spark Applications using Spark RDD concepts

- Learn data ingestion using Sqoop

- Perform SQL queries using Spark SQL

- Implement various machine learning algorithms in Spark MLlib API and Clustering

- Explain Kafka and its components

Top Companies Using Spark

- Microsoft

Added Spark support to Azure HDInsight (its cloud-hosted version of Hadoop).

- IBM

IBM uses Spark technology to manage the construction of machine learning algorithms in its SystemML.

- Amazon

Amazon uses Apache Spark to run Spark apps developed in Scala, Java, and Python.

- Yahoo!

Yahoo originally relied on Hadoop for analyzing big data. Today, Apache Spark is its next cornerstone.

Apart from them many more names like:

- Conviva

- Netflix

- Oracle

- Hortonworks

- Cisco

- Verizon

- Visa

- Databricks


- Accenture PLC

- Paxata

- DataStax, Inc.

- UC Berkeley AMPLab

- TripAdvisor

- Samsung Research America

- Shopify

- Premise

- Quantifind

- Radius Intelligence

- OpenTable

- Hitachi Solutions

- The Hive

- IBM Almaden

- eBay

- Bizo and many more

- Apache Spark is the go-to tool for data science at scale. It is an open-source, distributed compute platform and the first tool in the data science toolbox built specifically with data science in mind.

- Spark is different from the myriad other solutions to this problem because it allows Data Scientists to develop simple code to perform distributed computing, and the functionality available in Spark is growing at an incredible rate.

- Much has been made in the Data Science community around Spark’s ability to train Machine Learning models at scale, and this is a key benefit, but the real value comes from being able to put an entire analytics pipeline into Spark, right from the data ingestion and ETL processes, through the data wrangling and feature engineering processes through to training and execution of models.

- What's more, with Spark Streaming and GraphX, Spark can provide a much more complete analytics solution.

Register with a training institute that provides Apache Spark and Scala certification, complete the training, and get certified.