Big Data and Hadoop Online Training

The Big Data Hadoop training program helps you master Big Data Hadoop and Spark in preparation for the Cloudera CCA Spark and Hadoop Developer Certification (CCA175) exam, and covers Hadoop administration through 14 real-time, industry-oriented case-study projects. In this Big Data course, you will learn MapReduce, Hive, Pig, Sqoop, Oozie, and Flume; set up clusters on Amazon EC2; and work with the Spark framework and RDDs, Scala and Spark SQL, machine learning using Spark, Spark Streaming, and more.

In collaboration with

Online Class

Projects

Hands-On


60 Hrs Instructor-led Training

Mock Interview Session

Project Work & Exercises

Flexible Schedule

24 x 7 Lifetime Support & Access

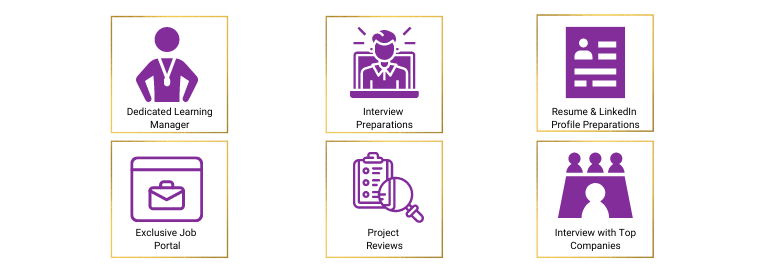

Certification and Job Assistance

Course Benefits

Big Data and Hadoop Online Training Course Overview

1. Learn the fundamentals

2. Efficient data extraction

3. MapReduce

4. Debugging techniques

5. Hadoop frameworks

6. Real-world analytics

This course is designed for:

- Complete beginners who want to learn Big Data Hadoop

- Professionals who want to earn Big Data Hadoop certifications

Before taking a Big Data and Hadoop course, candidates should ideally have basic knowledge of a programming language such as Python, Scala, or Java, and a working understanding of SQL and relational databases (RDBMS).

Mentors Pool follows a rigorous certification process. To become a certified Big Data Hadoop professional, you must fulfill the following criteria for the online instructor-led course:

- Successfully complete all projects, which are evaluated by the trainers

- Score a minimum of 60 percent in the Big Data Hadoop quiz conducted by Mentors Pool

Big Data has a major impact on businesses worldwide, with applications in a wide range of industries such as healthcare, insurance, transport, logistics, and customer service. A role as a Big Data Engineer places you on the path to an exciting, evolving career that is predicted to grow sharply into 2025 and beyond.

This Big Data Engineer certification training, co-developed by Mentors Pool and IBM, is designed to give you in-depth knowledge of the flexible and versatile frameworks in the Hadoop ecosystem and of big data engineering topics such as data model creation, database interfaces, advanced architecture, Spark, Scala, RDDs, Spark SQL, Spark Streaming, Spark ML, GraphX, Sqoop, Flume, Pig, Hive, Impala, and Kafka architecture. The program also teaches you to model, ingest, replicate, and share data using MongoDB, a NoSQL database management system.

The Big Data Engineer course curriculum will give you hands-on experience connecting Kafka to Spark and working with Kafka Connect.

Talk to Us

IN: +91-8197658094

According to Forbes, the Big Data and Hadoop market is expected to reach $99.31B by 2022, growing at a CAGR of 42.1% from 2015. McKinsey predicts that by 2018 there will be a shortage of 1.5M data experts. According to Indeed salary data, the average salary of Big Data Hadoop developers is $135k.

Fees

Online Classroom

- 60 Hrs of Instructor-led Training

- 1:1 Doubt Resolution Sessions

- Attend as many batches as you like, for life

- Flexible Schedule


Enrolment validity: Lifetime


EMI options are available with various credit cards.


Corporate Training

- Customised Learning

- Enterprise-grade learning management system (LMS)

- 24x7 Support

- Enterprise-grade reporting

Course Content


Big Data and Hadoop Online Training Course Content

Learning Objectives:

This module introduces the core concepts of big data analytics and the seven Vs of big data: Volume, Velocity, Veracity, Variety, Value, Vision, and Visualization. You will also explore big data platforms, analytics, and their applications using Hadoop 3.

Topics:

- Understanding Big Data

- Types of Big Data

- Difference between Traditional Data and Big Data

- Introduction to Hadoop

- Distributed Data Storage in Hadoop: HDFS and HBase

- Hadoop Data Processing and Analysis Services: MapReduce, Spark, Hive, Pig, and Storm

- Data Integration Tools in Hadoop

- Resource Management and Cluster Management Services

Hands-on: None
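To make distributed storage in HDFS a little more concrete: HDFS splits each file into fixed-size blocks (128 MB by default in Hadoop 2.x and 3.x, controlled by `dfs.blocksize`) and stores several replicas of every block (3 by default, controlled by `dfs.replication`). The sketch below is plain Python, not a Hadoop API; it estimates how many blocks a file occupies and how much raw cluster storage its replicas consume. The function name `hdfs_footprint` and the 1 GB file are hypothetical examples.

```python
import math

MB = 1024 * 1024


def hdfs_footprint(file_bytes, block_size=128 * MB, replication=3):
    """Estimate block count, block replicas, and raw bytes for one HDFS file.

    The last block holds only the remaining bytes (HDFS does not pad it),
    so raw usage is simply the file size times the replication factor.
    """
    blocks = math.ceil(file_bytes / block_size)
    block_replicas = blocks * replication
    raw_bytes = file_bytes * replication
    return blocks, block_replicas, raw_bytes


# A hypothetical 1 GB file stored with the default settings.
blocks, replicas, raw = hdfs_footprint(1024 * MB)
print(blocks, replicas, raw // MB)  # 8 24 3072
```

The replication factor is what gives HDFS its fault tolerance, and it is also why a cluster's usable capacity is roughly a third of its raw disk space under the defaults.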

Learning Objectives:

In this module, you will learn the features of Hadoop 3.x and how they improve reliability and performance. You will also be introduced to the MapReduce framework and learn the difference between MapReduce and YARN.

Topics:

- The Need for Hadoop in Big Data

- Understanding Hadoop and Its Architecture

- The MapReduce Framework

- What is YARN?

- Understanding Big Data Components

- Monitoring, Management and Orchestration Components of Hadoop Ecosystem

- Different Distributions of Hadoop

- Installing Hadoop 3

Hands-on: Install Hadoop 3.x

Learning Objectives:

Learn to install and configure a Hadoop cluster.

Topics:

- Hortonworks sandbox installation & configuration

- Hadoop Configuration files

- Working with Hadoop services using Ambari

- Hadoop Daemons

- Browsing Hadoop UI consoles

- Basic Hadoop Shell commands

- Eclipse & WinSCP installation & configuration on a VM

Hands-on: Install and configure Eclipse on a VM

Learning Objectives:

Learn about the components of the MapReduce framework and the common patterns in the MapReduce paradigm that can be used to design and develop MapReduce code for specific objectives.

Topics:

- Running a MapReduce application in MR2

- MapReduce Framework on YARN

- Fault tolerance in YARN

- Map, Reduce & Shuffle phases

- Understanding Mapper, Reducer & Driver classes

- Writing MapReduce WordCount program

- Executing & monitoring a MapReduce job

Hands-on: Use case – sales calculation using MapReduce
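The Map, Shuffle, and Reduce phases and the WordCount program listed above can be illustrated without a cluster. The sketch below is a single-process Python simulation, not the Hadoop Java API (`Mapper`, `Reducer`, `Job`): `mapper` emits `(word, 1)` pairs, `shuffle` groups values by key as the framework does between the two phases, and `reducer` sums each group. All function names here are illustrative.

```python
from collections import defaultdict


def mapper(line):
    # Map phase: emit a (word, 1) pair for every word in one input line.
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    # Shuffle phase: group every emitted value under its key, then sort by key,
    # mirroring what the framework does before handing data to reducers.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())


def reducer(key, values):
    # Reduce phase: combine all values for one key; here, sum the counts.
    return key, sum(values)


def word_count(lines):
    # Plays the role of the driver: wires map -> shuffle -> reduce together.
    mapped = (pair for line in lines for pair in mapper(line))
    return dict(reducer(key, values) for key, values in shuffle(mapped))


print(word_count(["Hadoop stores data", "Spark and Hadoop process data"]))
# {'and': 1, 'data': 2, 'hadoop': 2, 'process': 1, 'spark': 1, 'stores': 1}
```

The same shape fits the sales-calculation use case: have the mapper emit a hypothetical `(region, sale_amount)` pair instead of `(word, 1)`, and the reducer still sums per key.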

Learning Objectives:

Learn about Apache Spark and how to use it for big data analytics based on a batch processing model. Get to know the origin of DataFrames and how Spark SQL provides an SQL interface on top of DataFrames.

Topics:

- SparkSQL and DataFrames

- DataFrames and the SQL API

- DataFrame schema

- Datasets and encoders

- Loading and saving data

- Aggregations

- Joins

Hands-on:

Explore the APIs for creating and manipulating DataFrames, and dig deeper into advanced aggregation features, including groupBy, window functions, rollup, and cube. Also learn how to join datasets and the types of joins available, such as inner, outer, and cross joins.
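The difference between join types is easiest to see on a tiny dataset. The sketch below is plain Python, not Spark: it joins two lists of `(key, value)` rows the way an inner, left outer, or full outer join would, filling the missing side with `None` (Spark would produce `null`). In PySpark the equivalent call is `DataFrame.join` with its `how` argument; the `join` function, `customers`, and `orders` below are hypothetical. A cross join, which pairs every left row with every right row regardless of key, is left out for brevity.

```python
def join(left, right, how="inner"):
    """Join two lists of (key, value) rows on key, mimicking SQL join types.

    "inner" keeps only matching keys, "left" also keeps unmatched left rows,
    and "full" keeps unmatched rows from both sides (missing side = None).
    """
    if how not in ("inner", "left", "full"):
        raise ValueError(f"unsupported join type: {how}")
    out = []
    matched_right = set()
    for lkey, lval in left:
        hits = [(i, rval) for i, (rkey, rval) in enumerate(right) if rkey == lkey]
        for i, rval in hits:
            matched_right.add(i)
            out.append((lkey, lval, rval))
        if not hits and how in ("left", "full"):
            out.append((lkey, lval, None))  # unmatched left row survives
    if how == "full":
        for i, (rkey, rval) in enumerate(right):
            if i not in matched_right:
                out.append((rkey, None, rval))  # unmatched right row survives
    return out


customers = [(1, "Asha"), (2, "Ravi"), (3, "Meera")]  # hypothetical (id, name)
orders = [(1, 250), (1, 100), (4, 75)]                # hypothetical (id, amount)

print(join(customers, orders, "inner"))  # [(1, 'Asha', 250), (1, 'Asha', 100)]
print(join(customers, orders, "left"))
print(join(customers, orders, "full"))
```

This uses a nested loop for readability; Spark itself picks a physical strategy such as a broadcast hash join or a sort-merge join depending on the data sizes.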

Learning Objectives:

Understand stream-processing systems, Spark Streaming, DStreams in Apache Spark, the DAG and DStream lineage, and transformations and actions.

Topics:

- A short introduction to streaming

- Spark Streaming

- Discretized Streams

- Stateful and stateless transformations

- Checkpointing

- Operating with other streaming platforms (such as Apache Kafka)

- Structured Streaming

Hands-on: Process Twitter tweets using Spark Streaming
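Discretized streams and the stateless/stateful distinction can be sketched without Spark. Spark Streaming cuts a live stream into small batches; a stateless transformation such as `reduceByKey` applied per batch sees only that batch, while a stateful one such as `updateStateByKey` carries per-key state from batch to batch (which is why it requires checkpointing). The plain-Python sketch below batches by count rather than by time interval, and the hashtag list is a hypothetical stand-in for the tweet stream in the hands-on.

```python
from collections import Counter


def micro_batches(events, batch_size):
    # Discretize the stream into consecutive batches. Spark Streaming cuts
    # batches by time interval; a fixed count is used here for simplicity.
    for start in range(0, len(events), batch_size):
        yield events[start:start + batch_size]


def run_stream(events, batch_size=3):
    per_batch = []       # stateless: each result depends on one batch only
    running = Counter()  # stateful: carried from batch to batch
    for batch in micro_batches(events, batch_size):
        counts = Counter(batch)   # like reduceByKey within one batch
        per_batch.append(dict(counts))
        running.update(counts)    # like updateStateByKey across batches
    return per_batch, dict(running)


hashtags = ["#spark", "#hadoop", "#spark", "#kafka", "#spark", "#hive", "#hadoop"]
per_batch, totals = run_stream(hashtags)
print(per_batch)  # [{'#spark': 2, '#hadoop': 1}, {'#kafka': 1, '#spark': 1, '#hive': 1}, {'#hadoop': 1}]
print(totals)     # {'#spark': 3, '#hadoop': 2, '#kafka': 1, '#hive': 1}
```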

Learning Objectives:

Learn how Pig simplifies Hadoop programming for building complex, end-to-end enterprise Big Data solutions.

Topics:

- Background of Pig

- Pig architecture

- Pig Latin basics

- Pig execution modes

- Pig processing – loading and transforming data

- Pig built-in functions

- Filtering, grouping, sorting data

- Relational join operators

- Pig Scripting

- Pig UDFs

Learning Objectives:

Learn about Hive's tools for easy data ETL, its mechanism for imposing structure on data, and its capabilities for querying and analyzing large datasets stored in Hadoop files.

Topics:

- Background of Hive

- Hive architecture

- Hive Query Language

- Migrating the metastore database from Derby to MySQL

- Managed & external tables

- Data processing – loading data into tables

- Using Hive built-in functions

- Partitioning data using Hive

- Bucketing data

- Hive Scripting

- Using Hive UDFs
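Partitioning and bucketing decide where each row of a Hive table is physically stored. A table declared with `PARTITIONED BY (state STRING)` gets one subdirectory per partition value (for example `state=KA`), and `CLUSTERED BY (customer_id) INTO 4 BUCKETS` spreads rows within each partition across 4 files by hashing the bucket column. The plain-Python sketch below models that layout; `crc32` is a deterministic stand-in because Hive's actual hash function differs, and the `sales` table, its rows, and the function names are hypothetical. `/user/hive/warehouse` is Hive's usual default warehouse directory.

```python
import zlib
from collections import defaultdict


def partition_dir(table_dir, column, value):
    # Each partition value gets its own subdirectory named column=value.
    return f"{table_dir}/{column}={value}"


def bucket_for(key, num_buckets):
    # Bucket number = hash(key) mod num_buckets. crc32 is a deterministic
    # stand-in; Hive's actual hash function is different.
    return zlib.crc32(str(key).encode("utf-8")) % num_buckets


def layout(rows, table_dir, part_col, bucket_col, num_buckets):
    """Map (partition directory, bucket number) -> rows stored there."""
    files = defaultdict(list)
    for row in rows:
        directory = partition_dir(table_dir, part_col, row[part_col])
        files[(directory, bucket_for(row[bucket_col], num_buckets))].append(row)
    return dict(files)


sales = [  # hypothetical records
    {"state": "KA", "customer_id": 101, "amount": 40},
    {"state": "KA", "customer_id": 102, "amount": 15},
    {"state": "TN", "customer_id": 101, "amount": 60},
]
files = layout(sales, "/user/hive/warehouse/sales", "state", "customer_id", 4)
for (directory, bucket), stored in files.items():
    print(directory, "bucket", bucket, [r["customer_id"] for r in stored])
```

Because the bucket is a pure function of the key, a given `customer_id` lands in the same bucket number in every partition, which is what makes bucket sampling and bucketed joins possible.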

Learning Objectives:

Watch demos of HBase bulk loading and HBase filters. Also learn what ZooKeeper is, how it helps monitor a cluster, and why HBase uses ZooKeeper.

Topics:

- HBase overview

- Data model

- HBase architecture

- HBase shell

- ZooKeeper & its role in the HBase environment

- HBase Shell environment

- Creating tables

- Creating column families

- CLI commands – get, put, delete & scan

- Scan Filter operations
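The HBase data model behind these shell commands can be pictured as a map of rows sorted by row key, where each cell is addressed as `family:qualifier` and keeps timestamped versions. The toy class below is plain Python, not the HBase client API, and `ToyHTable` is a hypothetical name: `put` writes a new version, `get` returns the newest one, `scan` reads a contiguous row-key range (start row inclusive, stop row exclusive, as in an HBase scan) with an optional filter, and `delete_row` removes a whole row, which is closer to the shell's `deleteall` than to `delete` (which targets a single cell).

```python
import bisect


class ToyHTable:
    """Toy in-memory model of an HBase table; NOT the HBase client API.

    Rows are kept sorted by row key. Each cell is addressed as
    "family:qualifier" and keeps timestamped versions, newest first.
    """

    def __init__(self, families):
        self.families = set(families)  # column families are fixed at creation
        self.keys = []                 # row keys in sorted order
        self.rows = {}                 # row key -> {column -> [(ts, value), ...]}

    def put(self, row, column, value, ts):
        family = column.split(":", 1)[0]
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        if row not in self.rows:
            bisect.insort(self.keys, row)
            self.rows[row] = {}
        versions = self.rows[row].setdefault(column, [])
        versions.append((ts, value))
        versions.sort(key=lambda version: version[0], reverse=True)

    def get(self, row, column):
        # Return the newest version of one cell, or None if absent.
        versions = self.rows.get(row, {}).get(column)
        return versions[0][1] if versions else None

    def delete_row(self, row):
        # Removes a whole row (closer to the shell's `deleteall`).
        if self.rows.pop(row, None) is not None:
            self.keys.remove(row)

    def scan(self, start, stop, row_filter=None):
        # Start row inclusive, stop row exclusive, results in row-key order.
        lo = bisect.bisect_left(self.keys, start)
        hi = bisect.bisect_left(self.keys, stop)
        for key in self.keys[lo:hi]:
            latest = {col: v[0][1] for col, v in self.rows[key].items()}
            if row_filter is None or row_filter(key, latest):
                yield key, latest


users = ToyHTable(["info"])
users.put("user#001", "info:name", "Asha", ts=1)
users.put("user#002", "info:name", "Ravi", ts=1)
users.put("user#003", "info:name", "Meera", ts=1)
users.put("user#001", "info:name", "Asha K", ts=2)  # newer version of the cell

print(users.get("user#001", "info:name"))                      # Asha K
print([key for key, _ in users.scan("user#001", "user#003")])  # ['user#001', 'user#002']
```

Keeping rows sorted by key is the design point to notice: it is what makes range scans cheap, and why row-key design matters so much in HBase.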

Learning Objectives:

Learn how to import and export data between RDBMS and HDFS.

Topics:

- Importing data from RDBMS to HDFS

- Exporting data from HDFS to RDBMS

- Importing & exporting data between RDBMS & Hive tables
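Sqoop parallelizes an import by running several map tasks (four by default, set with `-m`/`--num-mappers`), each reading the slice of the source table whose `--split-by` column (the primary key by default) falls in its own range. The plain-Python sketch below shows a simplified version of that range splitting for an integer key; Sqoop's own splitter logic differs in detail, and `split_ranges`, `where_clauses`, and the `order_id` column are hypothetical.

```python
def split_ranges(min_id, max_id, num_mappers=4):
    """Divide the key range [min_id, max_id] into half-open [lower, upper) slices.

    A simplified model of how an import can be parallelized: each mapper
    would read only the rows whose split column falls in its slice.
    """
    if num_mappers < 1 or max_id < min_id:
        raise ValueError("need num_mappers >= 1 and max_id >= min_id")
    span = max_id - min_id + 1
    num_mappers = min(num_mappers, span)  # no more slices than distinct keys
    size, extra = divmod(span, num_mappers)
    ranges, lower = [], min_id
    for i in range(num_mappers):
        upper = lower + size + (1 if i < extra else 0)  # spread the remainder
        ranges.append((lower, upper))
        lower = upper
    return ranges


def where_clauses(column, ranges):
    # One hypothetical WHERE clause per mapper.
    return [f"{column} >= {lo} AND {column} < {hi}" for lo, hi in ranges]


for clause in where_clauses("order_id", split_ranges(1, 1000, 4)):
    print(clause)
# order_id >= 1 AND order_id < 251
# order_id >= 251 AND order_id < 501
# order_id >= 501 AND order_id < 751
# order_id >= 751 AND order_id < 1001
```

Equal-width ranges only balance the work when keys are spread evenly; with skewed keys one mapper can end up doing most of the import, which is why the choice of split column matters.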

Learning Objectives:

Understand how multiple Hadoop ecosystem components work together to solve Big Data problems. This module also covers a Flume demo and Apache Oozie, the workflow scheduler for Hadoop jobs.

Topics:

- Overview of Oozie

- Oozie Workflow Architecture

- Creating workflows with Oozie

- Introduction to Flume

- Flume Architecture

- Flume Demo
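An Oozie workflow is a directed acyclic graph of actions: it is defined in a `workflow.xml` file as action nodes connected by control nodes such as `fork` (run branches in parallel) and `join` (wait for all branches). The plain-Python sketch below derives that ordering from a hypothetical set of job dependencies using the standard library's `graphlib` (Python 3.9+). It only illustrates the scheduling idea; in Oozie you declare the forks and joins explicitly rather than having them computed, and the action names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each action maps to the set of actions it depends on.
workflow = {
    "flume_ingest": set(),
    "sqoop_import": set(),
    "pig_cleanse": {"flume_ingest"},
    "hive_load": {"pig_cleanse", "sqoop_import"},  # joins two branches
    "report": {"hive_load"},
}


def run_order(dag):
    """Return one valid execution order (dependencies always come first)."""
    return list(TopologicalSorter(dag).static_order())


def parallel_stages(dag):
    """Group actions into stages whose members could run concurrently."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything whose inputs are done
        stages.append(ready)
        sorter.done(*ready)
    return stages


print(parallel_stages(workflow))
# [['flume_ingest', 'sqoop_import'], ['pig_cleanse'], ['hive_load'], ['report']]
```

The first stage corresponds to a fork (two independent ingestion jobs), and `hive_load` corresponds to a join that waits for both branches.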

Learning Objectives:

Learn to make sense of data and shape how it is used and interpreted. Data is easier to understand when it is visualized than when it is read from tables, columns, or text files, because we tend to grasp graphical information more readily than textual or numerical information.

Topics:

- Introduction

- Tableau

- Chart types

- Data visualization tools

Hands-on: Use Data Visualization tools to create a powerful visualization of data and insights.

Course Projects

Analysis of Aadhaar

The Aadhaar card database is currently the largest biometric project of its kind in the world. The Indian government needs to analyze the database: divide the data by state, calculate how many people are still not registered and how many cards have been approved, and break the results down by gender, age, location, and so on. At this scale, the data must be kept in distributed storage rather than in a traditional database system. As a solution, Hadoop is used for storage, and the analysis is done in Pig Latin. The benefit of Pig Latin is that it needs far fewer lines of code, which reduces the overall time needed to develop and test the code. The results are then analyzed graphically.
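The state-wise breakdown described in this project maps onto a few Pig Latin operators: `FILTER` the records by status, `GROUP` them by state, and `COUNT` each group. The plain-Python sketch below mirrors that shape on a handful of hypothetical records; it is not Pig code, the field layout is an assumption, and counting people who are not yet registered would additionally need population figures that this sketch does not have.

```python
from collections import defaultdict

# Hypothetical enrolment records: (state, gender, age, status)
records = [
    ("Karnataka", "F", 34, "approved"),
    ("Karnataka", "M", 51, "rejected"),
    ("Karnataka", "F", 19, "approved"),
    ("Bihar", "M", 27, "approved"),
    ("Bihar", "F", 45, "pending"),
]


def state_summary(rows):
    """Group by state; count enrolments, approvals, and approvals by gender."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    approved_by_gender = defaultdict(lambda: defaultdict(int))
    for state, gender, _age, status in rows:
        totals[state] += 1           # like Pig's GROUP ... BY state with COUNT
        if status == "approved":     # like Pig's FILTER ... BY status == 'approved'
            approved[state] += 1
            approved_by_gender[state][gender] += 1
    return {
        state: {
            "total": totals[state],
            "approved": approved[state],
            "approved_by_gender": dict(approved_by_gender[state]),
        }
        for state in totals
    }


print(state_summary(records)["Karnataka"])
# {'total': 3, 'approved': 2, 'approved_by_gender': {'F': 2}}
```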

Hadoop Web Log Analytics

Derive insights from web log data. The project involves aggregating log data, using Apache Flume to transport it, and processing the data to generate analytics. You will learn to build workflows and cleanse data using MapReduce, Pig, or Spark.
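The cleansing and aggregation steps can be prototyped in plain Python before moving them to MapReduce, Pig, or Spark. This sketch assumes the logs use the Apache Common Log Format (the project description does not state a format), treats lines that fail to parse as dirty records to drop, and computes a few example metrics. The sample lines and function names are hypothetical.

```python
import re
from collections import Counter

# Apache Common Log Format: host ident authuser [timestamp] "request" status bytes
CLF = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\d+|-)'
)


def parse(line):
    """Return a dict of fields, or None for a malformed line (cleansing step)."""
    match = CLF.match(line)
    if not match:
        return None
    record = match.groupdict()
    record["status"] = int(record["status"])
    record["size"] = 0 if record["size"] == "-" else int(record["size"])
    return record


def analyze(lines):
    records = [r for r in map(parse, lines) if r is not None]
    return {
        "valid": len(records),
        "dropped": len(lines) - len(records),
        "hits_per_path": Counter(r["path"] for r in records),
        "errors": sum(1 for r in records if r["status"] >= 400),
        "bytes": sum(r["size"] for r in records),
    }


logs = [  # hypothetical sample lines
    '10.0.0.1 - - [10/Oct/2023:13:55:36 +0530] "GET /index.html HTTP/1.1" 200 2326',
    '10.0.0.2 - - [10/Oct/2023:13:56:01 +0530] "GET /cart HTTP/1.1" 404 -',
    'garbled line without the expected fields',
    '10.0.0.1 - - [10/Oct/2023:13:57:12 +0530] "POST /cart HTTP/1.1" 200 512',
]
print(analyze(logs))
```

In a distributed version, `parse` would become the map step and each metric a keyed aggregation in the reduce step.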

Analysis in the Banking Sector (Citibank)

The Citi group of banks is one of the world’s largest providers of financial services. In recent years, it has adopted a fully Big Data-driven approach to drive business growth and enhance customer services, because traditional systems cannot handle the huge volume of incoming data. Using Hadoop, the bank stores and analyzes banking data to derive multiple insights. The platform is built primarily on Hadoop, with datasets sourced from different applications that ingest multi-structured data streams from transactional stores, customer feedback, and business process data sources. The Hadoop platform supports analyses such as fraud detection and fine-tuning of customer segmentation.

Course Certification

The Big Data Analytics course sets you on the path to becoming an expert in Big Data analytics by teaching its core concepts and the technologies involved. The course also has you work on real-time, industry-based projects. Through intensive training, you will learn the practical applications of the field.

Today, the job market is saturated and competition is intense. Without a specialization, you may not be considered for the job you want.

Big Data Hadoop is used across enterprises in many industries, and demand for Hadoop professionals is expected to grow. Certification tells recruiters that you have the Big Data Hadoop skills they are looking for. With top corporations receiving tens of thousands of resumes for a handful of job postings, a Hadoop certification helps you stand out from the crowd. A Certified Hadoop Administrator also commands higher pay, with an average annual income of $123,000. Hadoop certification can thus take your career to the next level.

If you are looking for the best Hadoop certification, here are a few tips to help you decide:

- Spend some time researching the courses online that offer Big Data Hadoop certifications. Read about them in detail and shortlist the ones that can best advance your career. Look for a training provider that offers live, interactive classes from industry experts.

- Several providers offer the certification, but not all of them will help you. Some provide only recorded sessions. What you want is an advanced learning platform that offers industry-relevant courses and the opportunity to interact with the faculty. Before selecting a course, find out what resources they offer, which learning platform they use, and how the course is delivered.

- Check the accreditation and credibility of the training provider. Many providers have affiliations with private universities for certification.

- Every course has a duration and a cost. Make sure you can manage the course alongside your job or studies, and that you have enough time to attend all the classes.

Training in Hadoop and big data offers several advantages in today's data-driven world:

- Enhance your career opportunities as more organizations work with big data

- Professionals with good knowledge and skills in Hadoop are in demand across various industries

- Improve your salary with a new skill-set. According to ZipRecruiter, a Hadoop professional earns an average of $133,296 per annum

- Secure a position with leading companies like Google, Microsoft, and Cisco with skills in Hadoop and big data

The Hadoop certification from KnowledgeHut costs INR 24,999 in India and $1199 in the US.

Certification Course FAQs

Hadoop has become the de facto technology for storing, handling, evaluating, and retrieving large volumes of data. Big Data analytics has been shown to provide significant business benefits, and more and more organizations are seeking to hire professionals who can extract crucial information from structured and unstructured data. Mentors Pool brings you a full-fledged course on Big Data analytics and Hadoop development that will teach you how to develop, maintain, and use your Hadoop cluster for organizational benefit.

After completing our course, you will be able to understand:

- What Big Data is, why it is needed, and its applications in business

- The tools used to extract value from Big Data

- The basics of Hadoop, including the fundamentals of HDFS and MapReduce

- Navigating the Hadoop Ecosystem

- Using various tools and techniques to analyse Big Data

- Extracting data using Pig and Hive

- How to increase sustainability and flexibility across the organization’s data sets

- Developing Big Data strategies for promoting business intelligence

Although it is not required, basic programming knowledge is recommended when embarking on a career in big data.

If you already meet the prerequisites for learning Hadoop, it can take a few days or weeks to learn the essentials. If you are starting from scratch, it can easily take 2 to 3 months; in that case, enrolling in Big Data Hadoop training is strongly recommended.

Yes, CCA certifications are valid for two years. CCP certifications are valid for three years.

The Cloudera CCA 175 exam requires a computer, a webcam, a Chrome or Chromium browser, and a good internet connection. For the full set of requirements, visit https://www.examslocal.com/ScheduleExam/Home/CompatibilityCheck